NVIDIA CUDA AI Assistant

AI for CUDA GPU Programming

Transform your GPU programming with AI-powered NVIDIA CUDA development. Generate GPU kernels, parallel algorithms, and high-performance computing code faster with intelligent assistance for CUDA programming and optimization.

Trusted by GPU programmers and HPC developers • Free to start

Why Use AI for CUDA Development?

CUDA programming requires understanding GPU architecture and parallel algorithms. Our AI accelerates your development.

GPU Kernel Development

Generate CUDA kernels for parallel computation with proper thread organization and memory management

Parallel Algorithms

Implement parallel algorithms for matrix operations, sorting, reduction, and numerical computations

Memory Management

Optimize GPU memory usage with shared memory, global memory, and efficient data transfer patterns

Performance Optimization

Optimize CUDA code for maximum GPU utilization, occupancy, and memory bandwidth efficiency

CUDA Libraries

Integrate with cuBLAS, cuDNN, cuFFT, and other CUDA libraries for optimized computations

Debugging & Profiling

Debug CUDA kernels and profile performance with proper error handling and optimization techniques

Frequently Asked Questions

What is NVIDIA CUDA and how is it used in GPU programming?

NVIDIA CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model for NVIDIA GPUs. CUDA is used for high-performance parallel computing, scientific computing, machine learning and AI acceleration, graphics rendering and ray tracing, cryptocurrency mining, and data processing. CUDA extends C/C++ with syntax for writing kernels, which are functions that run on the GPU across thousands of parallel threads. It underpins deep learning frameworks such as TensorFlow and PyTorch, as well as scientific simulations and other high-performance computing applications.
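To make the idea of a kernel concrete, here is a minimal sketch of a SAXPY operation (y = a * x + y) in which each GPU thread handles one array element. The names `saxpy_kernel` and `saxpy` are hypothetical, chosen for illustration. The sketch assumes a device that supports unified (managed) memory and omits error checking for brevity.

```cuda
#include <cuda_runtime.h>
#include <cassert>
#include <vector>

// Kernel: each thread computes one element, y[i] = a * x[i] + y[i].
__global__ void saxpy_kernel(int n, float a, const float* x, float* y) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global thread index
    if (i < n) {                                    // guard the last, partially filled block
        y[i] = a * x[i] + y[i];
    }
}

// Hypothetical host wrapper (illustration only, no error checking).
std::vector<float> saxpy(float a, const std::vector<float>& x,
                         const std::vector<float>& y) {
    assert(x.size() == y.size());
    int n = static_cast<int>(x.size());
    std::vector<float> out(y);
    if (n == 0) return out;

    // Managed memory is accessible from both host and device.
    float* dx = nullptr;
    float* dy = nullptr;
    cudaMallocManaged(&dx, n * sizeof(float));
    cudaMallocManaged(&dy, n * sizeof(float));
    for (int i = 0; i < n; ++i) { dx[i] = x[i]; dy[i] = y[i]; }

    int threads = 256;                         // threads per block
    int blocks = (n + threads - 1) / threads;  // enough blocks to cover n elements
    saxpy_kernel<<<blocks, threads>>>(n, a, dx, dy);
    cudaDeviceSynchronize();                   // wait before reading results on the host

    for (int i = 0; i < n; ++i) out[i] = dy[i];
    cudaFree(dx);
    cudaFree(dy);
    return out;
}
```

Calling `saxpy(2.0f, x, y)` from host code returns a new vector and leaves the inputs untouched. Production code would check the return value of every CUDA call.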

How does the AI help with CUDA kernel development?

The AI generates CUDA kernel code including: kernel function definitions with proper CUDA syntax, thread indexing and block organization, memory allocation and data transfer (cudaMalloc, cudaMemcpy), shared memory usage for optimization, synchronization points (__syncthreads()), error handling and CUDA API calls, and kernel launch configurations (grid and block dimensions). It follows CUDA best practices and generates optimized, efficient GPU code.
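As a rough illustration of how those pieces fit together, the sketch below computes adjacent differences (out[i] = in[i] - in[i-1]) using a shared-memory tile, `__syncthreads()`, explicit `cudaMalloc`/`cudaMemcpy` transfers, and a launch configuration derived from the input size. The `CUDA_CHECK` macro, `kBlockSize`, and the function names are assumptions made for this example, not output from the tool.

```cuda
#include <cuda_runtime.h>
#include <stdexcept>
#include <vector>

// Hypothetical helper: turn a CUDA error code into a C++ exception.
#define CUDA_CHECK(call)                                              \
    do {                                                              \
        cudaError_t err_ = (call);                                    \
        if (err_ != cudaSuccess)                                      \
            throw std::runtime_error(cudaGetErrorString(err_));       \
    } while (0)

constexpr int kBlockSize = 256;  // threads per block (assumed value)

// out[i] = in[i] - in[i-1], with out[0] = in[0].
__global__ void adjacent_diff_kernel(const int* in, int* out, int n) {
    __shared__ int tile[kBlockSize];
    int tid = threadIdx.x;
    int i = blockIdx.x * blockDim.x + tid;

    if (i < n) tile[tid] = in[i];  // stage this block's elements in shared memory
    __syncthreads();               // every thread in the block reaches this barrier
    if (i >= n) return;

    int prev;
    if (tid > 0)    prev = tile[tid - 1];  // neighbour is in the same tile
    else if (i > 0) prev = in[i - 1];      // neighbour belongs to the previous block
    else            prev = 0;              // first element has no neighbour
    out[i] = tile[tid] - prev;
}

// Hypothetical host wrapper. Device buffers leak if a check throws;
// a real implementation would use RAII wrappers.
std::vector<int> adjacent_diff(const std::vector<int>& in) {
    int n = static_cast<int>(in.size());
    std::vector<int> out(n);
    if (n == 0) return out;

    size_t bytes = n * sizeof(int);
    int* d_in = nullptr;
    int* d_out = nullptr;
    CUDA_CHECK(cudaMalloc(&d_in, bytes));
    CUDA_CHECK(cudaMalloc(&d_out, bytes));
    CUDA_CHECK(cudaMemcpy(d_in, in.data(), bytes, cudaMemcpyHostToDevice));

    int grid = (n + kBlockSize - 1) / kBlockSize;  // blocks needed to cover n
    adjacent_diff_kernel<<<grid, kBlockSize>>>(d_in, d_out, n);
    CUDA_CHECK(cudaGetLastError());       // catches launch-configuration errors
    CUDA_CHECK(cudaDeviceSynchronize());  // catches errors raised during execution

    CUDA_CHECK(cudaMemcpy(out.data(), d_out, bytes, cudaMemcpyDeviceToHost));
    CUDA_CHECK(cudaFree(d_in));
    CUDA_CHECK(cudaFree(d_out));
    return out;
}
```

Note that the barrier sits before the early return: placing `__syncthreads()` inside a branch that only some threads of a block take leads to undefined behavior.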

Can it optimize CUDA code for performance and memory efficiency?

Yes! The AI optimizes CUDA code by: maximizing GPU occupancy with proper thread block sizing, arranging memory accesses so that they coalesce, using shared memory for frequently accessed data, implementing efficient reduction algorithms, minimizing memory transfers between host and device, applying loop unrolling and vectorized memory access, and suggesting CUDA library usage (cuBLAS, cuDNN) for common operations. It generates high-performance CUDA code following optimization best practices.
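Two of these techniques, coalesced access and a shared-memory reduction, can be sketched in a single sum kernel. This is one common pattern under stated assumptions rather than the only or fastest approach: `kThreads` must be a power of two, the cap of 64 blocks is an arbitrary choice, and the helper names are hypothetical.

```cuda
#include <cuda_runtime.h>
#include <algorithm>
#include <stdexcept>
#include <vector>

constexpr int kThreads = 256;  // threads per block; must be a power of two

// Hypothetical helper: throw on any CUDA error.
inline void check(cudaError_t err) {
    if (err != cudaSuccess) throw std::runtime_error(cudaGetErrorString(err));
}

// Each block writes one partial sum to partial[blockIdx.x].
__global__ void block_sum_kernel(const float* in, float* partial, int n) {
    __shared__ float cache[kThreads];
    int tid = threadIdx.x;

    // Grid-stride loop: neighbouring threads read neighbouring addresses,
    // so global memory loads coalesce.
    float sum = 0.0f;
    for (int i = blockIdx.x * blockDim.x + tid; i < n; i += blockDim.x * gridDim.x)
        sum += in[i];
    cache[tid] = sum;
    __syncthreads();

    // Tree reduction in shared memory: halve the active threads each step.
    for (int stride = blockDim.x / 2; stride > 0; stride >>= 1) {
        if (tid < stride) cache[tid] += cache[tid + stride];
        __syncthreads();
    }
    if (tid == 0) partial[blockIdx.x] = cache[0];
}

// Hypothetical host wrapper: sums the per-block partials on the CPU.
float gpu_sum(const std::vector<float>& data) {
    int n = static_cast<int>(data.size());
    if (n == 0) return 0.0f;
    int blocks = std::min((n + kThreads - 1) / kThreads, 64);

    float* d_in = nullptr;
    float* d_partial = nullptr;
    check(cudaMalloc(&d_in, n * sizeof(float)));
    check(cudaMalloc(&d_partial, blocks * sizeof(float)));
    check(cudaMemcpy(d_in, data.data(), n * sizeof(float), cudaMemcpyHostToDevice));

    block_sum_kernel<<<blocks, kThreads>>>(d_in, d_partial, n);
    check(cudaGetLastError());

    // Only `blocks` floats travel back to the host; this copy also
    // waits for the kernel to finish.
    std::vector<float> partial(blocks);
    check(cudaMemcpy(partial.data(), d_partial, blocks * sizeof(float),
                     cudaMemcpyDeviceToHost));
    check(cudaFree(d_in));
    check(cudaFree(d_partial));

    float total = 0.0f;
    for (float p : partial) total += p;
    return total;
}
```

Finishing the reduction on the host keeps the example short. For large inputs, a second kernel pass or a library routine is usually a better fit.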

Does it support CUDA libraries and frameworks integration?

Absolutely! The AI understands CUDA ecosystem integration including: cuBLAS for linear algebra operations, cuDNN for deep learning primitives, cuFFT for Fast Fourier Transform, Thrust for parallel algorithms and data structures, NCCL for multi-GPU communication, and integration with deep learning frameworks (TensorFlow, PyTorch, Caffe). It generates code that leverages these libraries for optimal performance and follows CUDA ecosystem best practices.
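For common operations, a library call often replaces a hand-written kernel entirely. As a small sketch, assuming the Thrust headers that ship with the CUDA Toolkit, the hypothetical helpers below sort and sum a host vector on the GPU without any explicit kernel code.

```cuda
#include <thrust/copy.h>
#include <thrust/device_vector.h>
#include <thrust/reduce.h>
#include <thrust/sort.h>
#include <vector>

// Hypothetical helper: copy to the device, sort there, copy back.
std::vector<int> sort_on_gpu(const std::vector<int>& host) {
    thrust::device_vector<int> d(host.begin(), host.end());  // host-to-device copy
    thrust::sort(d.begin(), d.end());                        // parallel sort on the GPU
    std::vector<int> out(host.size());
    thrust::copy(d.begin(), d.end(), out.begin());           // device-to-host copy
    return out;
}

// Hypothetical helper: parallel sum with an initial value of 0.
int sum_on_gpu(const std::vector<int>& host) {
    thrust::device_vector<int> d(host.begin(), host.end());
    return thrust::reduce(d.begin(), d.end(), 0);
}
```

`thrust::device_vector` manages allocation and deallocation of device memory, so no manual `cudaMalloc` or `cudaFree` calls are needed here.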

Start Programming GPUs with AI

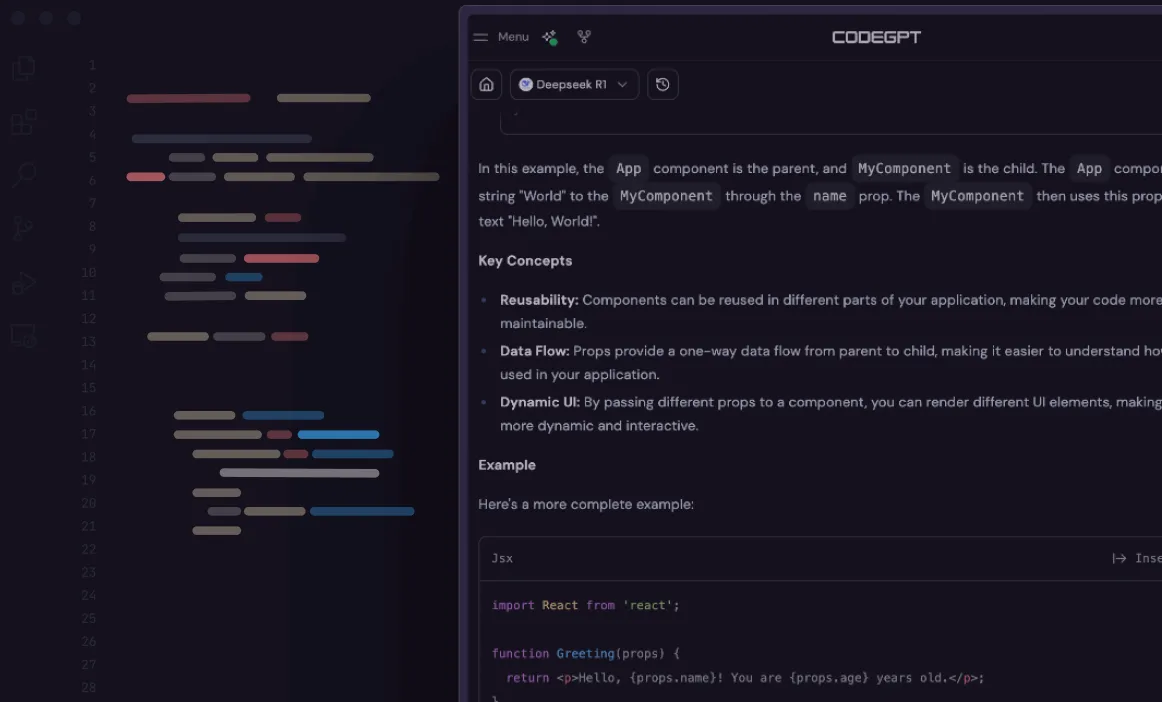

Download CodeGPT and accelerate your CUDA development with intelligent GPU programming assistance

Download VS Code Extension

Free to start • No credit card required

Need HPC Development Services?

Let's discuss custom GPU solutions, parallel computing, and high-performance computing for your projects

Talk to Our Team

Custom GPU solutions • HPC consulting